ARubi: Programming Ubiquitous Computing Environments Through the Lens of Augmented Reality

One of the remaining challenges in ubiquitous computing is the lack of programming tools that let non-academic users, including designers and people with only basic programming experience, program ubiquitous experiences in their environment. Inspired by the vision and challenges Dr. Abowd discusses in his paper "What next, ubicomp? Celebrating an Intellectual Disappearing Act", we built ARubi, a system that combines augmented reality (AR) with a graphical user interface (GUI) for prototyping context-aware applications. We also describe the back-end software and hardware infrastructure that supports ARubi. The ultimate goal of this work is to enable end users to build, debug, and test ubiquitous computing applications in situ and visually, without writing code. We present an overview of the ARubi interface, the system infrastructure, and a motivating scenario set in a classroom.

System Design

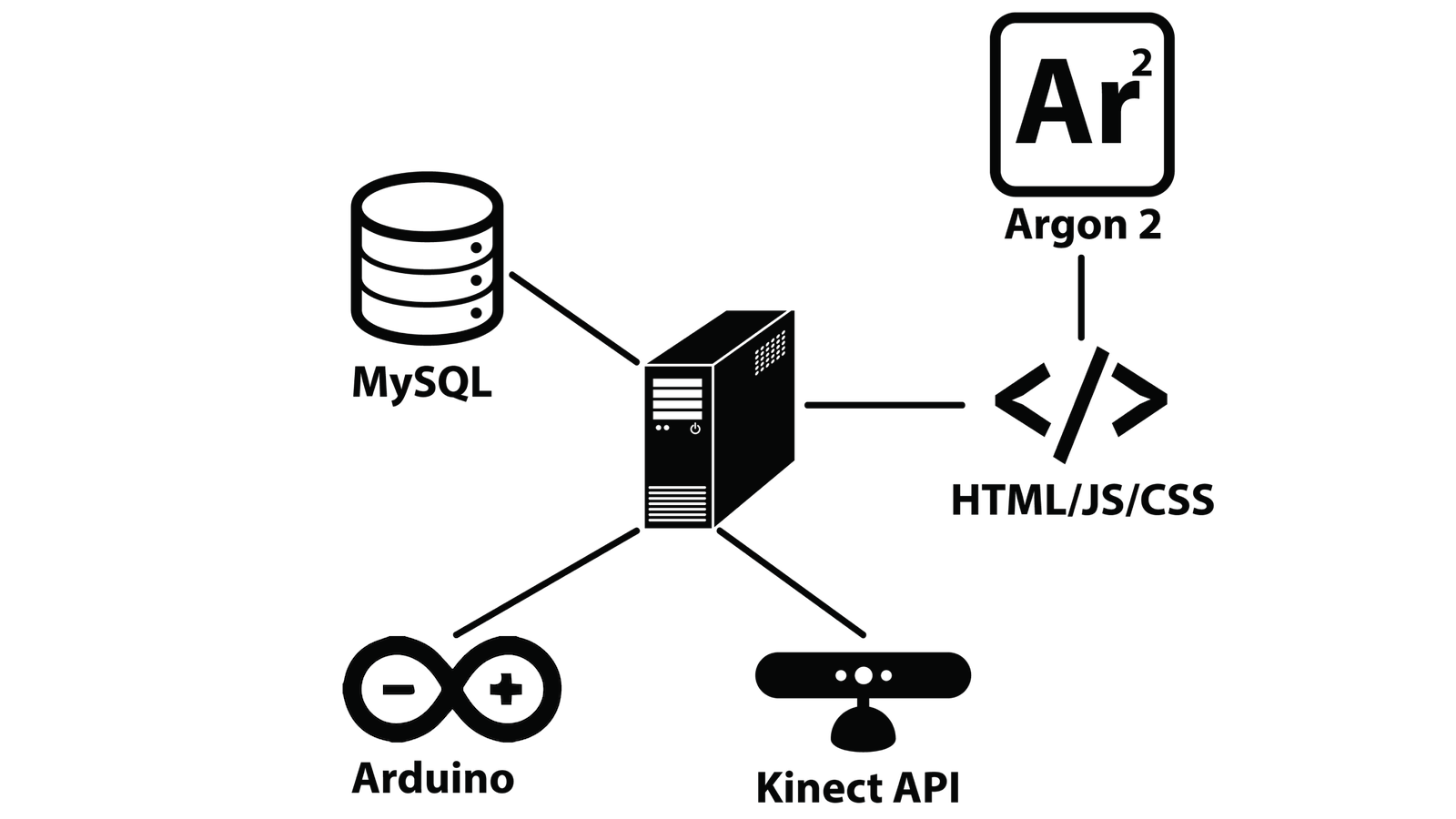

The system infrastructure consists of a central server with a database of input and output devices and the rules that connect them. The ARubi augmented reality interface is built with web technologies such as HTML and JavaScript on Argon 2, an augmented reality browser, to take advantage of AR and touch input on a tablet. It detects devices through AR markers in the environment and communicates with the database to retrieve device information. The interface also lets users view existing rules and create new events and reactions, which are stored in the database automatically.
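To make the device-and-rule database more concrete, here is a minimal in-memory sketch in TypeScript. It is not ARubi's actual schema or server code: the `Device` and `Rule` shapes, the `RuleStore` class, and all field names are assumptions made for illustration. It only shows how a marker ID detected in the AR view could resolve to a device record and how a rule could link an input event to an output reaction.

```typescript
// Hypothetical data model: every name and field here is an assumption,
// not ARubi's actual schema.
type DeviceKind = "input" | "output";

interface Device {
  markerId: string;       // ID encoded in the AR marker attached to the device
  name: string;
  kind: DeviceKind;
  capabilities: string[]; // events for inputs, reactions for outputs
}

interface Rule {
  id: number;
  inputMarker: string;
  event: string;
  outputMarker: string;
  reaction: string;
}

class RuleStore {
  // Prototype-free object used as a simple markerId -> device dictionary.
  private devices: { [markerId: string]: Device | undefined } = Object.create(null);
  private rules: Rule[] = [];
  private nextId = 1;

  registerDevice(device: Device): void {
    this.devices[device.markerId] = device;
  }

  // Called when the AR view recognizes a marker in the camera image.
  lookupByMarker(markerId: string): Device | undefined {
    return this.devices[markerId];
  }

  // Stores a rule only if both ends exist and support the named event/reaction.
  addRule(inputMarker: string, event: string, outputMarker: string, reaction: string): Rule {
    const input = this.devices[inputMarker];
    const output = this.devices[outputMarker];
    if (!input || input.kind !== "input" || input.capabilities.indexOf(event) === -1) {
      throw new Error("unknown input device or event");
    }
    if (!output || output.kind !== "output" || output.capabilities.indexOf(reaction) === -1) {
      throw new Error("unknown output device or reaction");
    }
    const rule: Rule = { id: this.nextId++, inputMarker, event, outputMarker, reaction };
    this.rules.push(rule);
    return rule;
  }

  // All rules that involve a given device, e.g. to overlay them in the AR view.
  rulesFor(markerId: string): Rule[] {
    return this.rules.filter((r) => r.inputMarker === markerId || r.outputMarker === markerId);
  }
}
```

A real deployment would back this with a persistent database on the central server; the validation in `addRule` is one plausible way to keep rules consistent with the registered devices.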

4 Steps to Programming Your Environment

- Discover devices and services in the environment.

- Select devices to save events and reactions between them.

- Draw connections between inputs and outputs. Each rule is saved automatically.

- Debug the saved rules with visual feedback.
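The last two steps above can be sketched in a few lines of TypeScript. This is an illustration under stated assumptions, not ARubi's implementation: `Connection`, `TraceEntry`, `connect`, and `debugEvent` are hypothetical names, and the classroom devices in the usage note are made up. The idea is that drawing a connection yields one stored rule, and debugging replays an event against the saved rules and reports which ones fire.

```typescript
// Hypothetical sketch of the "draw connections" and "debug" steps.
// All names are illustrative assumptions.
interface Connection {
  input: string;    // name of the input device
  event: string;    // event that triggers the rule
  output: string;   // name of the output device
  reaction: string; // reaction the output device performs
}

interface TraceEntry {
  connection: Connection;
  fired: boolean;
  reason: string;
}

// Drawing a line from an input to an output produces one connection,
// which is added to the saved rules right away (no explicit save step).
function connect(rules: Connection[], c: Connection): Connection[] {
  return rules.concat([c]);
}

// Replays an event against the saved rules and reports, for each rule,
// whether it fired and why. A UI could render this trace as visual feedback.
function debugEvent(rules: Connection[], input: string, event: string): TraceEntry[] {
  return rules.map((c): TraceEntry => {
    if (c.input !== input) {
      return { connection: c, fired: false, reason: "different input device" };
    }
    if (c.event !== event) {
      return { connection: c, fired: false, reason: "different event" };
    }
    return { connection: c, fired: true, reason: c.output + " performs " + c.reaction };
  });
}
```

For example, after connecting a made-up "door sensor" event "opened" to a "projector" reaction "power on", calling `debugEvent(rules, "door sensor", "opened")` marks that rule as fired and every other saved rule as not fired, along with the reason.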

Report Link

Collaborators: Gabriel Reyes, Haozhe Li